"Rumors Spread on Social Media..."

Facebook is directly responsible for violence globally and in American society. Mark Zuckerberg, or Facebook, needs to be held liable.

Hi,

Welcome to BIG, a newsletter about the politics of monopoly and finance. If you’d like to sign up, you can do so here. Or just read on…

Today I’m going to write about a legal theory by Carrie Goldberg, a victim’s rights attorney on the cutting edge of tech platform regulation. Her theory is a useful lens to rethink how we understand tech platforms, and who is at fault for harms that follow from specific user interface and algorithm choices.

First, some housekeeping. I was on Rising with Krystal Ball and Saagar Enjeti today to talk about the potential for a Google antitrust case. The New York Times reported there is internal disagreement at the Department of Justice over when to bring the case. My view is that there hasn’t been a case like this in 20 years, and lawyers always want more time because they are cautious. So Attorney General Bill Barr’s mandate of ‘you’ve had long enough, just file the case already’ is reasonable. That said, Barr isn’t the most credible party here, so there’s that.

And now…

Speech or Product?

One of the more interesting cases in internet law is a stalking case involving a 33-year-old actor living in New York City, Matthew Herrick. BuzzFeed reported on it last year. Here’s the story:

At the peak of the abuse Matthew Herrick suffered, 16 men showed up every day at his door, each one expecting either violent and degrading sex, drugs, or both. Herrick, a 32-year-old aspiring actor living in New York City, didn’t know any of them, but the men insisted they knew him — they’d just been chatting with him on the dating app Grindr. This scenario repeated itself more than 1,000 times between October 2016 and March 2017.

Herrick had deactivated his account and deleted the Grindr app from his phone in late 2015 when he’d started dating a man referred to in court documents as J.C., whom he’d met on the app. The two broke up in fall 2016. Soon after, according to court filings, J.C. began stalking Herrick and created fake profiles on Grindr impersonating Herrick… The profiles falsely claimed Herrick was HIV-positive and interested in unprotected sex and bondage. Through Grindr, Herrick says J.C. directed these men to his apartment or workplace, creating a world of chaos for him on a daily basis.

“It was a horror film,” Herrick told BuzzFeed News in an interview. “It’s just like a constant Groundhog Day, but in the most horrible way you can imagine. It was like an episode of Black Mirror.”

Protective orders and police reports against J.C. failed to stop the torrent of harassment. Herrick, his friends, and lawyers submitted 100 complaints to Grindr asking it to block J.C., but they received no response.

Eventually, Herrick, represented by victim’s rights attorney Carrie Goldberg, sued Grindr, using a novel legal theory to address this new form of stalking. “I argued,” Goldberg wrote in a filing to the Federal Communications Commission, “that Grindr is a defectively designed and manufactured product as it was easily exploited if didn’t have the ability to identify and exclude abusive users.” She was making a product liability claim.

Such an argument about a tech platform, even one like Grindr, is unusual, to say the least. There’s some bad faith here; some big tech-friendly scholars really want the debate to be about how to protect the public square of Facebook and Google from the meddling hand of democracy. But even lawyers and scholars who disdain the pernicious effects of platforms think about technology platforms as facilitating speech. They dislike misinformation, disinformation, fraud, and so forth, but they try to shoehorn claims about the problems with social media, search engines, or matching engines into the legal debate over the First Amendment or technology. And it’s a seductive path to go down, since technology is cool, and free speech is such a powerful American norm to argue about.

The desire to argue through the political lens of free speech is further heightened by the world’s biggest attention magnet, Donald Trump. A few months ago, Trump argued that large technology platforms are organized to censor conservative speech. He issued an executive order directing the government to see what it could do to hold tech platforms accountable for controlling speech. Specifically, Trump directed the Commerce Department to petition the Federal Communications Commission to make regulatory changes to a law called Section 230 of the Communications Decency Act, which is a shield useful to large technology platforms, as well as to services like Grindr, which used it to defend against Herrick’s claim.

The law is conceptually simple. Section 230 was passed in 1996 to protect the ability of AOL and CompuServe to run chatrooms without having to be responsible for what other people used them for. The law basically ensured that if a user said something defamatory in an AOL chatroom, the user, not AOL, would be liable. And whether AOL chose to take it down, filter content in a specific way, or keep it up, AOL would be protected by a ‘Good Samaritan’ clause, which says that it is allowed to run its service however it wants, and it is never responsible for what third-party speakers say. That’s why Google can display whatever search results or ads it wants, or Facebook can organize its algorithm however it wants, and neither corporation can be sued for third-party content. Mark Zuckerberg may tell the public he’s responsible for what happens on Facebook, but the law says he isn’t.

Section 230 is understood as the legal cornerstone of digital platforms. There’s even a book titled “The Twenty-Six Words that Created the Internet,” because the key section is just twenty-six words long (“No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”) So when Trump attacked Section 230 with his executive order, tech lobbyists went nuts. But protectors of Section 230 extend far beyond the big tech lobbying world.

The American Civil Liberties Union supports Section 230. So does the liberal group Common Cause, an organization created during the Nixon administration to take on that corrupt President. In Goliath, I showed how liberals were fooled in the 1970s into supporting corporate power, and there’s no better example than how many left-wing organizations support this law as a bulwark of free speech, instead of a liability shield for a particular kind of business model. Common Cause even brought a suit asserting the entire executive order was unconstitutional, the premise of which is that it violates Facebook’s corporate right to free speech. (One wonders if Common Cause still opposes Citizens United…)

Interestingly, free speech and Section 230 were also Grindr’s defense to Herrick’s lawsuit. Grindr argued it is merely a speech platform (or in Section 230 parlance, an ‘interactive computer service’), and if someone used Grindr to put Herrick in danger, well, it’s not Grindr’s fault. Goldberg scoffed at the notion that the case was about speech; to her, Grindr was simply a defective product, no different than an exploding toaster. “If you engineered and are profiting off one of the world’s biggest hook-up apps and don’t factor into its design the arithmetic certainty,” Goldberg wrote, “that it will sometimes be abused by predators, stalkers, rapists – you should be responsible to those you injure because of it.”

The district court, however, didn’t agree with the exploding toaster theory. “I don’t find what Grindr did to be acceptable,” US District Court Judge Valerie E. Caproni said, but she ruled for Grindr nonetheless. Grindr won on the basis that it is an interactive computer service immunized by Section 230, and that it bears no liability for the speech or actions of the stalker. On appeal, the situation repeated itself. The judges agreed with Herrick on the moral argument, but with Grindr on the law. “The whole thing is horrible,” said one appeals judge, Judge Dennis Jacobs. “But the question is, what’s the responsibility of Grindr?” Goldberg eventually appealed to the Supreme Court, which refused to hear the case. It’s not a crazy decision, as the stalker is clearly the key responsible party orchestrating the scheme. But there’s also something deeply problematic at work, because it’s evident that Grindr was assisting the bad behavior; Grindr even refused to help Herrick confirm who was behind the fake profiles.

It’s not just Grindr that induces such problems. Other services, like Facebook, YouTube, Google search, Twitter, etc., can place people in imminent danger, facilitate libel, foster housing discrimination, enable the sale of counterfeit or defective products, or organize a host of fraudulent activities. I went over some of these problems when I found Chinese scammers using Facebook advertising to sell counterfeit Rothy’s, a premium women’s shoe brand. A reader of BIG told me she didn’t realize she was buying counterfeit shoes, and meanwhile Rothy’s noted it couldn’t get Facebook to take the ads down.

In fact, Section 230 has become the shield for swaths of corrupt activity; Facebook can’t be held liable for enabling the kind of abuse Grindr enabled, for the same reason: it is merely an ‘interactive computer service.’ Like J.C.’s impersonation of Herrick, scammers use Facebook to impersonate soldiers so as to start fake long-distance relationships with lonely people, eventually tricking their victims into sending their ‘boyfriends’ money. Soldiers are constantly finding fake profiles of themselves, and victims are constantly cheated in heart-breaking ways. The military is helpless to do much about this; the power to act is in Facebook’s hands.

In other words, Section 230, and the law governing the structuring of platforms on the internet, creates a very weird kind of politics. In some ways it puts victim rights lawyers and Trump on the same side against liberal groups and big tech monopolies, though the debate is in fact much more scrambled. Trump isn’t wrong to critique Section 230, but his argument about conservative bias is, putting accuracy aside, beside the point. It’s not that there aren’t free speech issues at work, but the underlying problem is that the law creates an incentive for corrupt business models.

Is a Slot Machine a Platform for Speech?

Thinking of Facebook, Grindr, or Google as products or as communications networks, instead of as the public square, makes a lot of sense. Section 230 was created at a time when people distinguished between the offline and online worlds. But today, it makes no sense to distinguish between internet services and the rest of the economy. Convergence isn’t happening, it has happened; Amazon owns warehouses and massive real estate holdings, Walmart has a thriving online marketplace, and Google and Facebook both operate large data centers and undersea cables.

Moreover, data and information services are increasingly analogous to physical products. A slot machine is a slot machine, whether it has a physical lever or a browser. Internet services, such as video games and social media, can have addictive qualities with physiological characteristics similar to narcotics or gambling. As former Facebook executive Sean Parker noted, social media executives knowingly took advantage of these characteristics in product design.

And while it’s easy to blame the individual, there’s a lot of research suggesting that overuse of these services can result in depression, memory loss, alcoholism, and reduced empathy and social development. Even the basis for assuming that colors, formatting, and content are merely speech is falling apart; a judge recently ruled that a graphic, sent to a writer via Twitter, that was designed to trigger (and did trigger) an epileptic seizure in the recipient was assault. It makes increasingly less sense to characterize the wide variety of available digital goods and services, or goods and services with embedded digital capacity, purely as speech whose transmission is covered by Section 230 of the Communications Decency Act.

And if that is so, then we need a different framework to understand just what these things are. In one sense, Facebook is a dominant communications platform that should be subject to public utility regulation and antitrust law. But I also think that standard product liability theories should apply. If a product causes harm to the customer that the customer did not expect, and the producer could reasonably see that it would do so, then the product is defective and the producer is liable for that harm.

Goldberg’s legal theory is clever, but it hasn’t triumphed the one time it has been tested. Still, it’s hard to imagine that the legal barricade against recognizing that digital products can be defective and harmful can hold up for that much longer. It is simply a reality that these platforms affect people. Parents regulate ‘screen time’ for their kids because they know that these services are toys and products as much as they are platforms for speech. And the Silicon Valley creators of these products understand this even better than most Americans do; Steve Jobs didn’t let his kids touch iPhones or iPads. The law will have to catch up.

Do Facebook Products Create Rumors of Violence?

All of which brings me to the violence and strife occurring in the United States and around the world. Right-wingers and left-wingers each want to blame the other side for what is happening, with allegations of ‘anarchy’ and ‘antifa’ tossed out as easily as ‘white supremacy.’ It is a scary situation, as guns are now involved. But I think what’s important to recognize is that it is too easy to see what is happening as some unique Trump-specific episode, instead of part of a pattern of strife induced by social media products.

A few years ago, the United Nations noted that Facebook, after introducing its service all across Myanmar, had helped foster a genocide in that country. The country did not have much internet access until 2012, and then people began getting cell phones. In Myanmar, Facebook is the internet. The vast majority of the country is Buddhist, but a minority are a Muslim group known as Rohingya. The Myanmar military and police helped encourage mass killings, rapes, and arson against Rohingya in 2016-2017 (as well as more recently). Rumors on Facebook were a key vector for spreading violence.

It’s pretty well accepted that Facebook was at least partly responsible for what happened. And not just by Facebook’s critics; Kara Swisher asked Mark Zuckerberg about the situation, including whether he felt responsible for deaths that Facebook helped induce, and he said “I think that we have a responsibility to be doing more there.” When pressed, he even used product liability language, saying, “I want [to] make sure that our products are used for good.”

Zuckerberg is an engineer, and he makes products, not media, and these products have foreseeable effects, which is to say, they get people to argue with one another and spread rumors. Civil society groups in fact complained to Zuckerberg that, as with Grindr refusing to stop the use of its platform to place someone in imminent danger, his product was being used to spread “vicious rumors,” placing Rohingya at risk. Myanmar is just the most egregious example. Facebook induced ethnic divisiveness in Bangladesh, and did so in Sri Lanka as well, helping to foster false rumors that there was a “Muslim plot to wipe out the country’s Buddhist majority.”

In fact, false rumors of impending violence are spreading at an accelerated pace everywhere there are Facebook products. Take this story from India, “Rumors spread on social media fuel deadly India mob attacks”:

There was no truth to the rumors, police say, but fear ignited by those messages led to the brutal deaths last week of two Indian tourists, the latest in a wave of mob attacks fueled by social media rumors that have left well over a dozen people dead across the country.

Now, that being said, it’s easy to overstate the point. Facebook isn’t bombing people; it is merely a social media product that others use to spread harmful rumors, most of which amount to nothing. I don’t buy the argument that people are zombies controlled by internet deities who have evil plans for all of us. It’s also critical to recognize that Facebook, and other social media products, are merely widening social fissures that already exist in societies. But as with Grindr refusing to do anything about J.C., pretending Facebook had no role here is wrong.

Think about it a different way. If someone told me that a group of people were coming to my house to kill me and my family, and I weren’t sure if it was true or not, I’d sure want to defend myself. I’d be afraid. And most importantly, and this is the key point, I’d pay attention. You see, it’s not that Facebook is trying to produce violence, but Facebook is trying to get people to pay attention, because that’s what it sells, our attention to advertisers.

Facebook has organized a user interface and a set of algorithms that promotes content that is most likely to keep people paying attention to Facebook, in order to sell more advertising. And the false rumors, and the resulting violence, are a side effect of the need to sell advertising; they are a feature of the product’s design.

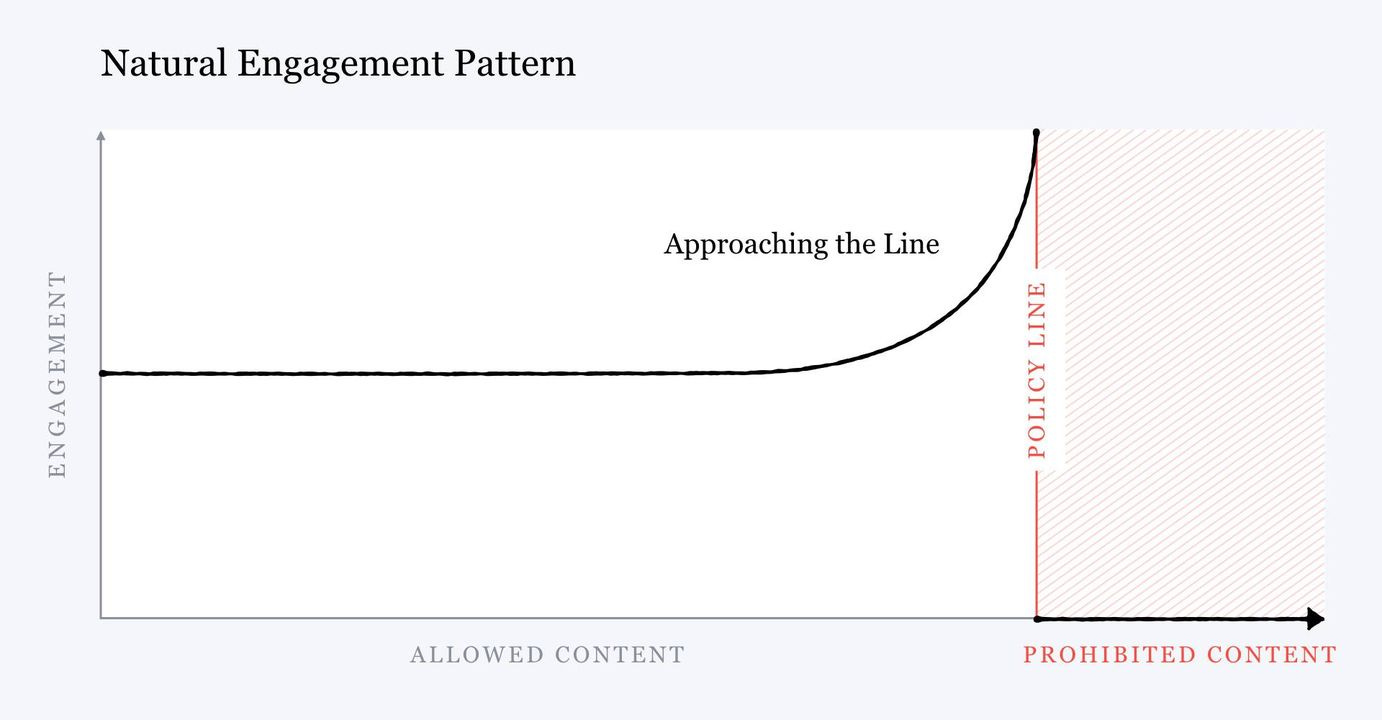

Zuckerberg noted this very dynamic in 2018, writing that “one of the biggest issues social networks face is that, when left unchecked, people will engage disproportionately with more sensationalist and provocative content.” This graph accompanied his post.

Facebook’s product, in other words, is defective, because while I might sign up for Facebook to stay in touch with friends and family or get access to the internet, the unexpected result is that I get addicted to a service that gives me false information about potential violence. In a society with a highly functional social fabric, Facebook will cause annoying arguments with family members, and perhaps make politics a bit meaner. But the weaker the governance system, the more likely it is to add to the potential for violence.

One objection to this line of thinking is that, well, if people are just going to respond badly to social media by spreading rumors, then putting liability on Facebook would result in no more social media. I find this a very unlikely outcome, but if it is true that there is no way to build a social media product without enabling genocide, then maybe social media shouldn’t exist. What would probably happen with a different standard is that corporations would be far more careful in testing and launching digital products. Product architects would develop a taxonomy for what is dangerous and what is safe, and platform design would head in a much healthier direction.

Now let’s return to domestic American strife. Take a look at these headlines and excerpts, and notice the phrase that keeps coming up: rumors spread on social media.

U.S. political divide becomes increasingly violent, rattling activists and police, Washington Post

But when rumors spread on social media that “busloads” of residents from Dallas and Fort Worth would be descending on the town to tear down the Confederate monument, hundreds of predominantly White conservative counterprotesters and members of armed conservative groups descended on the site, many heavily armed.

Hundreds Of People Show Up For St. George Protest — Based On A Rumor, NPR Utah

After rumors spread on social media that buses of antifa protesters would be in St. George for a Black Lives Matter event Saturday night, several hundred people gathered downtown with guns, American flags and Trump signs. Those rumors turned out to be false, but that did little to stop people from showing up. A small group of Black Lives Matter supporters also showed up and held signs nearby, though it wasn’t organized by the official Southern Utah chapter. There were tense standoffs between the groups at times, but no major issues arose.

Show of force, dozens of arrests mark quiet night in rattled Minneapolis

Crowds gathered quickly as rumors spread on social media that officers had killed him. Authorities almost immediately released video from city cameras showing that the death of Sole, who was Black, was a suicide. But by then the destruction had begun.

Unrest grips downtown Minneapolis; mayor declares curfew, governor activates National Guard

According to the Star Tribune, an unidentified man wanted in connection to a fatal shooting killed himself as police closed in, but rumors spread rapidly the man’s death had been at the hands of police.

Despite the immediate release of surveillance video of the incident by police, widespread looting and rioting erupted, forcing Walz to declare a state of emergency in the city and mobilize the Minnesota National Guard, the Star Tribune reported.

Members of Until Freedom plan to stay in Louisville at least through the Derby

Pinto said the group wants an update in the investigation from Kentucky Attorney General Daniel Cameron. However, Cameron recently said he's still waiting on information from the FBI.

Rumors spread on social media leading up to Tuesday about what could happen, and some schools even closed as a precaution and had a virtual learning day. Police and Louisville Mayor Greg Fischer had asked people to not spread misinformation.

Police: No, antifa not sending ‘a plane load of their people’ to Idaho to incite riots

Multiple posts that circulated Monday on social media spread false information claiming that antifascist protesters were planning riots in the Treasure Valley, according to multiple local law enforcement agencies and other officials.

Police Shot A Black Man In Englewood. Then Misinformation Spread Like Wildfire

That false, viral narrative persisted throughout Monday, as activists and community groups took to Twitter, Facebook and Instagram to say a 15-year-old boy had been murdered by police.

U.S. Conflict Is Part of a Global Trend

If you are a liberal Democrat, the obvious villain here is Donald Trump and his various conservative outlets, like Fox News. If you are a conservative, it is Democrats and MSNBC. But take a step back; once you get rid of the American accents, the faint echo of Myanmar or Sri Lanka is unmistakable. These social media products have a design defect, and that defect is playing out in our streets.

Now, of course, we can’t let the direct perpetrators off the hook – people are doing violent or dangerous things, and those people are at fault for what they are doing. In the case of dating apps, well, Grindr didn’t create stalking, harassment, or impersonation, and people were victimized by ex-boyfriends in violent and horrible ways before J.C. turned Matthew Herrick’s life into a living hell. However, Grindr made it possible for J.C. to engage in a particularly frictionless form of torture, and refused to provide victims the ability to stop the harm. That makes Grindr an accomplice, or at least, deeply negligent in its product design.

Similarly, Mark Zuckerberg didn’t create authoritarianism, sectional strife, or our particularly robust protest and gun culture. But Facebook and other social media products have had the foreseeable effect of creating a vector to spread fear and violence in the form of false rumors. We know this because it is happening all over the world, and it is happening in many different contexts. The specific cultural factions that commit violence against one another differ by country, as does the content of the false rumors, but the products doing the spreading are the same, and so is the pattern: rumors that are false and that include threats of violence.

I don’t know if product liability is the right legal theory to use. I imagine that we’ll have to use a whole suite of laws, from break-ups to common carriage regulations, to take on the power of big tech. But the point of thinking about Grindr from a product liability standpoint is that we have to place the locus of responsibility for harm on those who build and sell the products that cause harm. If we don’t, then harm will spread, because it’s cheaper and easier to build defective products when other people are bearing the cost. That’s what we saw in Myanmar. And it’s what we’re seeing in the streets of the United States in 2020.

Meanwhile, Myanmar genocide and civil strife in America notwithstanding, Mark Zuckerberg, because he is able to externalize the costs of this social dysfunction onto others, is worth $100 billion. After all, Zuckerberg gets to sell tickets to watch the end of the world.

Thanks for reading. Send me tips, stories I’ve missed, or comment by clicking on the title of this newsletter. And if you liked this issue of BIG, you can sign up here for more issues of BIG, a newsletter on how to restore fair commerce, innovation and democracy. If you really liked it, read my book, Goliath: The 100-Year War Between Monopoly Power and Democracy.

cheers,

Matt Stoller

Thanks for the thoughtful article, Matt.

I have a couple concerns about your suggestion that we repeal Section 230 of the CDA (if that is in fact what you are suggesting).

First, what gives you confidence that the current members of Congress will be able to effectively regulate speech on the internet? They are woefully ignorant about many details of technology (as evidenced by the Zuckerberg hearing), and standardized speech guidelines at the scale of a platform like Facebook are a totally open problem; it would be implausible to borrow from, say, how the FCC regulates radio, because of the volume of online content.

Second, you use the example of strangers impersonating soldiers to attract women online. Would you feel very differently if the same type of con was done via mail? Do you think the postal service should be held responsible for the bad content that it was delivering? I'm sure you will disagree with the analogy, but I'd love to see exactly why, because I, who am not at all a fan of Facebook, have to recognize that social media is more akin to a public online square than to, say, a radio broadcaster or a magazine (and thus that Section 230 makes sense).

Thanks again.

Hi Matt, I recently found this: back in 2012, Baen Books increased its ebook prices as a direct result of signing a contract with Amazon. http://teleread.com/baen-inks-deal-with-amazon-makes-major-changes-to-webscriptions-and-free-library/index.html

"Prices for backlist e-books will be going up, too; instead of $6, e-books of books whose print edition is currently hardcover will be $9.99, trade paperback $8.99, and mass market paperback $6.99."

The price increases did result in increased royalties for the authors (and presumably increased profits for Baen itself), so this wasn't solely due to Amazon's middle-man take. Though whether the price increases would have been as significant without Amazon's requirement that they not be undersold elsewhere online is the question.